Researchers at Tokyo Institute of Technology (Tokyo Tech) and Carnegie Mellon University have together developed a new human motion capture system that consists of a single ultra-wide fisheye camera mounted on the user's chest. The simplicity of their system could be conducive to a wide range of applications in the sports, medical and entertainment fields.

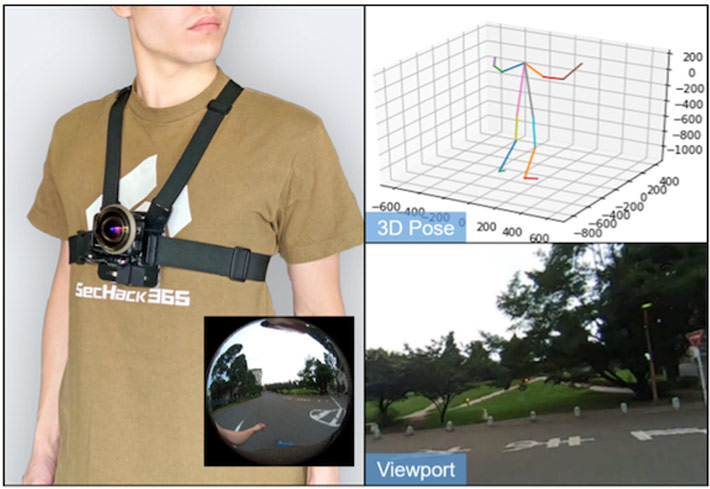

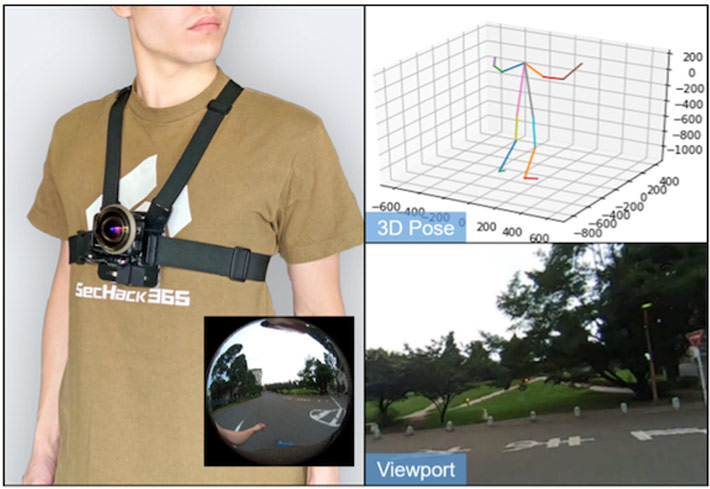

Figure 1. MonoEye captures 3D body pose as well as the user's perspective

MonoEye is based on a single ultra-wide fisheye camera worn on the user's chest, enabling activity capture in everyday life.

Computer vision-based technologies are advancing rapidly owing to recent developments in integrating deep learning. In particular, human motion capture is a highly active research area that is driving advances in fields such as robotics, computer-generated animation and sports science.

Conventional motion capture systems in specially equipped studios typically rely on several synchronized cameras attached to the ceiling and walls, which capture the movements of a person wearing a body suit fitted with numerous sensors. Such systems are often very expensive and limit the space and environment in which the wearer can move.

Now, a team of researchers led by Hideki Koike at Tokyo Tech present a new motion capture system that consists of a single ultra-wide fisheye camera mounted on the user's chest. Their design not only overcomes the space constraints of existing systems but is also cost-effective.

Named MonoEye, the system can capture the user's body motion as well as the user's perspective, or 'viewport'. "Our ultra-wide fisheye lens has a 280-degree field-of-view and it can capture the user's limbs, face, and the surrounding environment," the researchers say.
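To give a feel for why a field of view wider than 180 degrees matters for a chest-mounted camera, the following is a minimal sketch of a fisheye projection. It assumes an idealized equidistant model (image radius proportional to the angle from the optical axis); the source does not state which projection model MonoEye's lens follows, and the function name `equidistant_project` and the `image_radius_px` parameter are hypothetical, chosen only for illustration.

```python
import math


def equidistant_project(point, image_radius_px=500.0, fov_deg=280.0):
    """Project a 3D point given in the camera frame (+z is the optical axis).

    Assumes an idealized equidistant fisheye model, r = f * theta, which is
    an illustrative assumption and not necessarily the MonoEye lens model.
    Returns (u, v) pixel offsets from the image centre, or None if the point
    lies outside the field of view.
    """
    x, y, z = point
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0.0:
        return None

    # Angle between the viewing ray and the optical axis, in radians.
    theta = math.acos(max(-1.0, min(1.0, z / norm)))

    half_fov = math.radians(fov_deg) / 2.0
    if theta > half_fov:
        return None

    # Scale so that the edge of the field of view lands on the image rim.
    f = image_radius_px / half_fov
    r = f * theta
    phi = math.atan2(y, x)
    return (r * math.cos(phi), r * math.sin(phi))


# A point slightly behind the lens plane (z < 0), such as a limb or the face
# of the wearer, is still imaged with a 280-degree lens...
behind_plane = (1.0, 0.0, -0.5)
wide = equidistant_project(behind_plane, fov_deg=280.0)

# ...but falls outside the view of a 180-degree lens.
narrow = equidistant_project(behind_plane, fov_deg=180.0)
```

Under this assumed model, `wide` is a valid pixel offset while `narrow` is `None`, which illustrates how the extra 50 degrees on each side lets the camera see parts of the wearer's body that sit behind the plane of the lens.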

To achieve robust multimodal motion capture, the system has been designed with three deep neural networks capable of estimating 3D body pose, head pose and camera pose in real time.

The researchers have already trained these neural networks with an extensive synthetic dataset consisting of 680,000 renderings of people with a range of body shapes, clothing, actions, backgrounds and lighting conditions, as well as 16,000 frames of photo-realistic images.

Some challenges remain, however, due to the inevitable domain gap between synthetic and real-world datasets. The researchers plan to keep expanding their dataset with more photo-realistic images to help minimize this gap and improve accuracy.

The researchers envision that the chest-mounted camera could eventually be turned into an everyday accessory such as a tie clip, brooch or piece of sports gear.

The team's work will be presented at the 33rd ACM Symposium on User Interface Software and Technology (UIST), a leading forum for innovations in human–computer interfaces, to be held virtually on 20–23 October 2020.

Reference

Researchers: Dong-Hyun Hwang¹, Kohei Aso¹, Ye Yuan², Kris Kitani², Hideki Koike¹*

Session: 3A

Title: MonoEye: Multimodal Human Motion Capture System Using A Single Ultra-Wide Fisheye Camera

Conference: 33rd ACM Symposium on User Interface Software and Technology (UIST)

Affiliations:

¹ Tokyo Institute of Technology

² Carnegie Mellon University
