From booking train tickets to keeping in touch with our loved ones, people are constantly communicating through, shaping, and being influenced by computers. Understanding and improving this two-way relationship or "interaction" is the core of the field of "human–computer interaction." Current technologies often seek to reproduce "human–human" relationships with computers. But our high expectations, social biases, and imperfect understanding of ourselves limit the design of these technologies and the success of the experiences that they provide. Katie Seaborn, Associate Professor in the Department of Industrial Engineering and Economics and head of the Lab for Aspirational Computing at Tokyo Tech (ACTT), recognizes these issues better than most. Her groundbreaking research is helping to address them, pushing people to critically evaluate their contributions to technology and helping to envision a more positive, inclusive, and socially responsible future. Seaborn joined Tokyo Tech in April 2020. Here, we introduce her fascinating research and discuss the future of technology.

Advancing knowledge with human–computer interaction

What kind of research do you do?

My research is in a field called interaction design. Now, interaction design involves a combination of other areas of research and industry, like computer science and industrial engineering and even the arts, psychology, and anthropology. My specialization is in "human–computer interaction": I look at the relationship between people and the variety of computer-based systems, products, and services that they use. At the core of this field is the "human factor": the social, psychological, cognitive, and physical attributes of people that influence, or are influenced by, interaction with things in the world, in my case computer-based things. While computers may change—for example, we now focus more on smartphones than on desktop computers—the "human factor" remains.

When designing technologies, creators tend to rely on human models, even subconsciously. For some, replicating humanity in machines is the dream. But there is also a danger that we must contend with—we may not understand ourselves well enough to recreate humanity in machines in anything but stereotyped and superficial ways. Alexa and Siri are cases in point. They both represent the current standard of "virtual young women assistants," replicating a human model of traditional gender roles where women are cast as servile helpers in society. There is a considerable age bias in technology as well. You don't get to see aged avatars in virtual assistants or hear aged voices. These biases are well established. What we need to do, and what I'm trying to do, is work on solutions. This is the hard part that needs much creativity and academic nourishment. Only now are we recognizing the need for social justice within technology and research spaces at a large enough scale to take action and be taken seriously by the global research community.

Human Factors Engineering

Exploring the human factor in interfaces, interactions, and experiences

Serious Games & Gamification

Applying playful strategies and crafting games for serious pursuits

Inclusive Design & Critical Computing

Designing to support diversity, from age to disability and beyond

Attitude & Behaviour Change Methods

Considering psychosocial and cognitive factors for human betterment

Research themes of the Lab for Aspirational Computing at Tokyo Tech (ACTT).

A lot of my research has focused on achieving attitude and behavior change through strategies like gamification and gameful design. I've tended to focus on evaluating the effects and contributions of playful experiences on the lives of specific user groups, like older adults or people with disabilities. I think something that really highlights how our perceptions of social justice and bias have changed is that older adults were a "niche" group when I started working with them a decade ago. Now our rapidly ageing societies have pushed "the older adult user" to the forefront of research.

Overall, it is an exciting time to enact change, create cool stuff, and contribute to human knowledge, all at once!

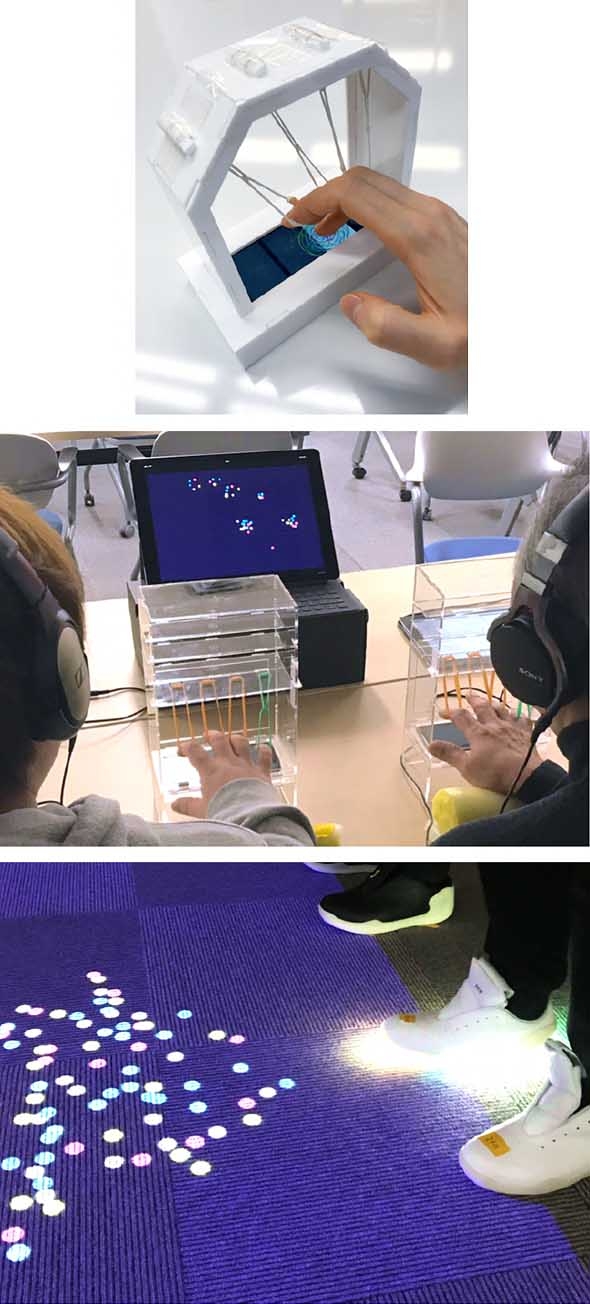

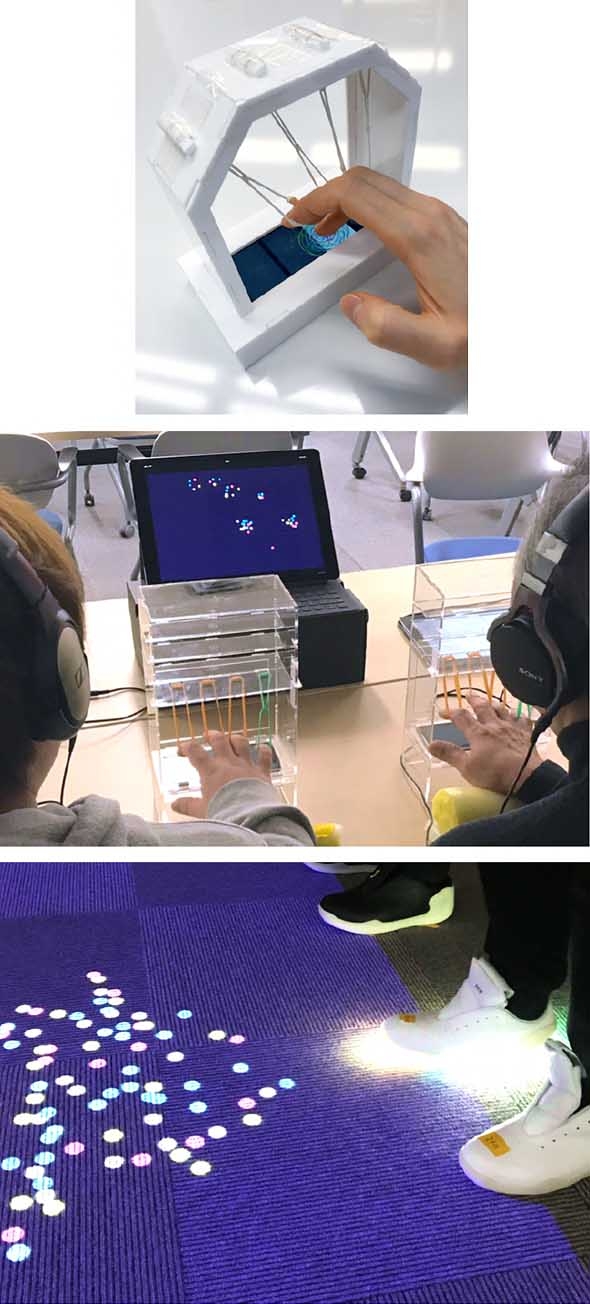

An intergenerational shared action game system created by Seaborn and her team and reported on in her paper entitled "Intergenerational shared action games for promoting empathy between Japanese youth and elders" published in the proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII).

How would people respond to an aged voice assistant?

Tell us a little about voice-based systems.

Voice-based systems cover a number of products, devices, and environments, from smart speakers and digital assistants, like Siri, to the voices of robots or even websites. We’re starting to take up these technologies more and more, so we need to figure out how to design them to meet our needs and expectations, as well as understand how they influence our attitudes and behavior.

At the moment, I'm looking at virtual assistants. Right now, they are kind of boring and gimmicky. Most of the tasks they can complete are ones we don't really need them to do. There tends to be a strong initial interest that quickly tapers off. It's not just a matter of bugs and technical problems—it's also a matter of meaningful use, or the lack thereof in this case. I feel that's kind of a shame. So, my work is focused on the future of virtual assistants. What could the design and capabilities of the ideal voice assistant be?

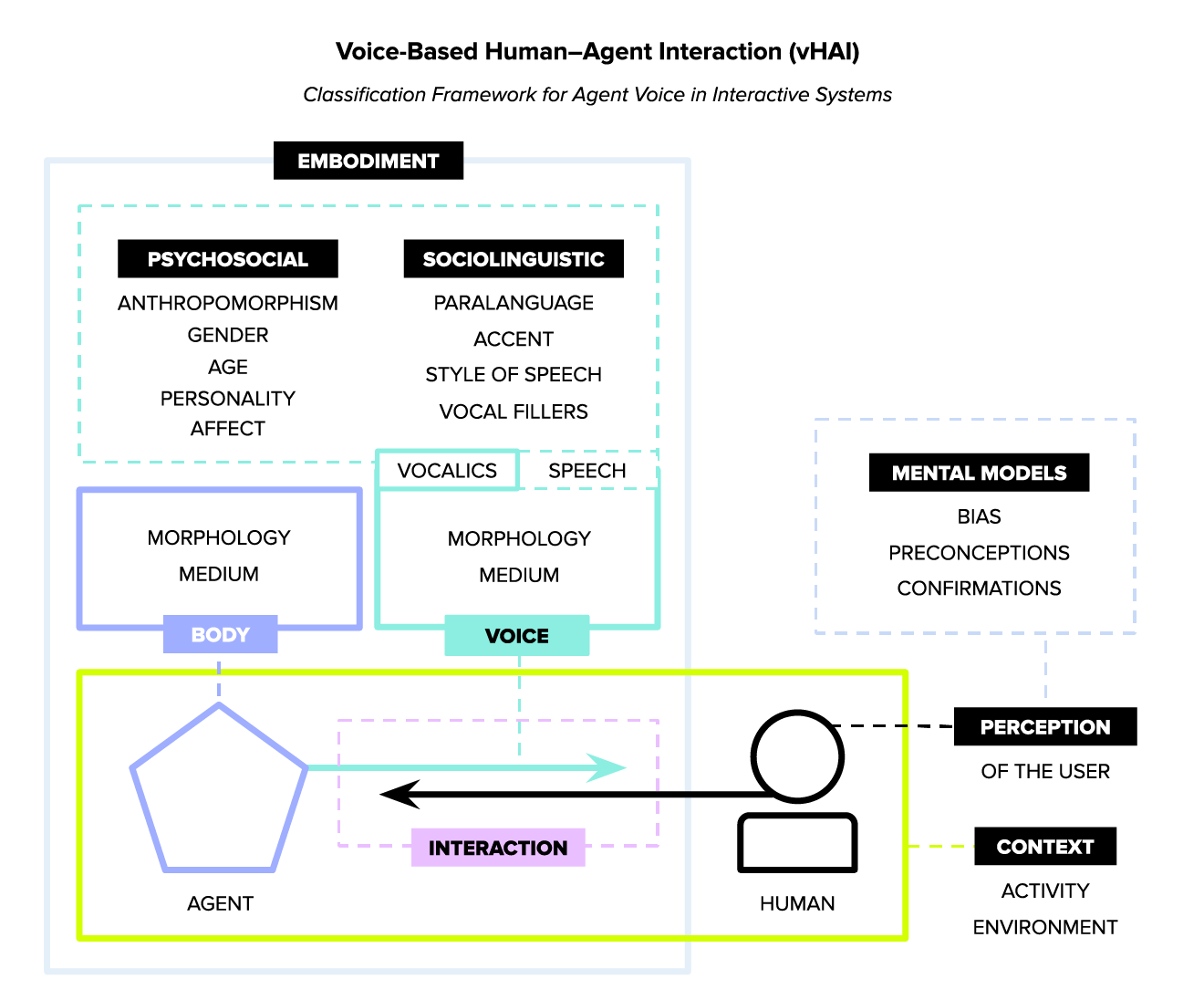

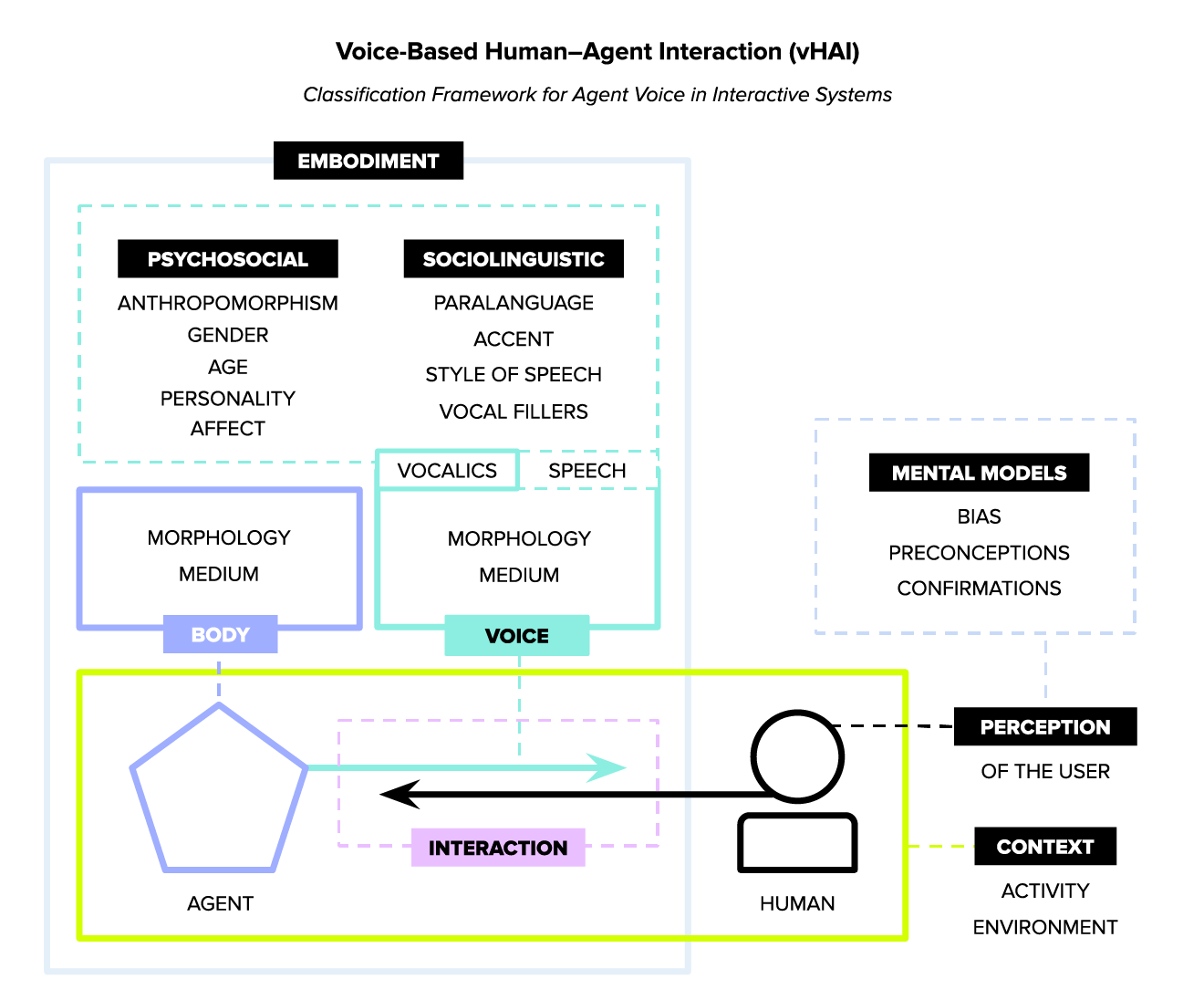

In her recent literature survey for the ACM (Association for Computing Machinery), Seaborn provides a high-level classification framework for voice-based human–agent interaction (vHAI). From "Voice in Human–Agent Interaction: A Survey," published in ACM Computing Surveys, Vol. 54, No. 4, 2021.

There's also a critical social aspect to voice assistants that we can't ignore. Voice, as an expressive medium, conveys an array of meaningful characteristics like age, gender, and personality, which are called psychosocial characteristics. It also conveys what are called sociolinguistic characteristics, which refer to rhythm, dialect, and style of speech. These characteristics are deeply tied to human perception, psychology, and cognition within human–human communication. But voice is still an emerging factor in the design of machines, so a consensus on how to evaluate and explore voice-based technologies is still taking shape. People also react differently to voice technologies when they come out of a computer speaker or smartphone as compared to a human-like robot. We need to not only classify these differences and pinpoint where they come from, but also explore our biases and preconceptions when interacting with these voice-based technologies.

A lot of research has been published in the last few years about gender bias in voice-based agents and interfaces. As I mentioned, there's an age bias as well. Other forms of bias are starting to be recognized, including bias based on racial and ethnic codes, accents, and social class. A couple of years ago, I worked on a project that used storytelling to prevent cognitive decline in older adults. The "voice" we used was that of a 70-year-old man, and naturally, that influenced how our listeners interacted with the program. But what was the nature and extent of the influence? This is something that I'm keen to explore. I recently received a grant for research on virtual assistants that have the voice of older adults. I want to study how people of all ages, but especially older adults, respond to an aged computer voice and what they expect out of such an assistant. Will human models of bias against older adults play a role? What might longitudinal interactions with the technology tell us about ourselves? Could bias against older adults be disrupted or shifted through positive, meaningful, and regular interactions with such a system? We're only just starting to ask such research questions in this area of study.

The boundaries between humans and technology are fluid

Speaking of the social aspects of technology, you recently proposed a brand-new threshold for artificial intelligence that achieves "sociality." Can you explain the concept behind it?

This work was inspired by a simple question—what is (and is not) artificial intelligence (AI)? Traditional AI focused on algorithms to perform actions or produce outputs, but now the idea of "embodied" AI is taking hold. Embodied AI basically means that the AI is interacting with some world—usually the physical world, but also virtual worlds—and learning from these interactions to continually improve its functioning. Nowadays, these embodied AI, like voice assistants and robots, are increasingly being used to carry out tasks for people and to support their decision-making. This is a social activity of communication between one agent and another: in this case, the embodied AI and a person. When the person perceives and reacts to the embodied AI as if it is a social agent, then it becomes "socially embodied." It's crossed the threshold. At least temporarily.

That was the idea—what we call the Tepper line. When a machine crosses the Tepper line it is seen as "social," but it can just as easily be punted back if its interactions fail to be socially convincing to the user. Whether or not a machine is perceived as social depends on the person interacting with it. And this perception may not hold up over time. As the interaction plays out—from moment to moment or over several interactions—the embodied AI may make mistakes that reveal its artificiality. Suddenly it loses that social quality. But it can recover. People are predisposed to perceiving sociality in interactive agents—even non-human ones. This is why I named the line after Sheri S. Tepper, an award-winning science fiction author who explored how boundaries between humans and non-humans, including alien species and technology, are constructed and fluid. The Tepper line can also apply to the diversity of AI-based technologies out there.

Important questions are being lost in the momentum behind gamification

You also mentioned that you work in games and play. Could you tell us more about that?

One field of study that I've worked within is called "gamification," and it basically describes the intentional use of game elements to create a gameful experience in non-game tasks and contexts. A common example is when an app provides points and badges for tasks that can be treated as achievements. Game elements are mostly inspired by modern computer games and video games, so there are many, many near-universal elements, like points and leaderboards, but there are sometimes roleplaying elements too, like when you choose a character class and have levels where you progress. Researchers have been looking at individual differences in people who play games and use gamified platforms. People have different interpretations, interests, and motivations when it comes to gamification. These can be based on previous experience with games, age, personality—there are a wide variety of factors involved.

One of the things I'm concerned about is the removal of gamification. Questions were raised early on about the effects of gamification. Will positive effects last? Could negative effects materialize as time passes? But nearly ten years on, there's still no answer. We already have many gamification elements in things we use in daily life, like, say, the educational system, where you do have achievements, move up levels, and so on. But much of the research is focused on the addition of gamification elements. What we need to find out is—what happens when we take gamification away? What happens when we "ungamify"? Maybe different people will react differently to gamification or its removal, but how? What factors into these reactions?

I'd love to get my fellow researchers interested in this and put together a panel or workshop on the subject, because there is such a dearth of research, as a recent small literature survey of mine showed. Years ago now, other researchers and I expressed some concern about whether gamification does no harm. It may just be that there is so much momentum behind gamification right now that we are still focusing on the most obvious research questions, and not the deeper impact.

I'm developing my own independent tracks

Your work touches upon a wide range of topics. What inspires you to work on such diverse subjects?

Much of my research so far has been driven by the people I've had the privilege of working with throughout my research career, as well as the academic resources we've had at our disposal. I also read a lot and widely, from general science magazines to science fiction. Many sci-fi authors look at the deeper human questions of technology, which helps me think of things from a different perspective. I'm now working on developing my own independent tracks in response to the latest topics and technologies in human–computer interaction.

Thinking beyond the interface

What got you started on your research journey?

I started working as an amateur web developer in my teens, when the internet was still new and computers weren't commonly found in the home. Towards the end of the ’90s, people started to see that the web might be the future. I started making websites as a creative endeavour. Then my parents' friends started asking me to build websites for their businesses. Ultimately, it was these experiences that led to my career in human–computer interaction.

During my undergraduate degree, I worked for the Government of Canada and in the private sector, creating the interfaces that people would interact with when they used a website or app. When we tested our products with people, I found myself thinking beyond the interface. What was the larger impact of our technology on people and societies? How could we find out? This led me back to graduate studies. That was when I had my first opportunity to do research, and I really loved it. I then moved across Canada to Toronto and started a Ph.D. at the University of Toronto, which has a special institute called the Knowledge Media Design Institute (KMDI): a really multidisciplinary space where I was able to work with a lot of different people who had different skills and perspectives. This inspired all of us to think about technology differently. After my Ph.D., I held a few postdoctoral research positions in the UK and Japan, including at the University College London Interaction Centre (UCLIC), the University of Tokyo, and the RIKEN Center for Advanced Intelligence Project (AIP). When I finished my postdoc tenure, I joined Tokyo Tech as an associate professor and started my own lab.

Seaborn developing a mixed reality game for her doctoral research with the support of her mentors, Profs. Deb Fels and Peter Pennefather. The University of Toronto, Toronto, Canada, circa 2016.

Tokyo Tech has the "human factor"

Why did you choose Tokyo Tech?

What drew me to Tokyo Tech was the industrial engineering department, which offered a program in human factors engineering as well as complementary programs focusing on computer systems. Typically, when you find human–computer interaction at universities, it's a small subset of a larger department, maybe one or two courses, or in a specific lab. I was very excited to find a specialization at Tokyo Tech! I knew I would have access to top-class technical ability and the opportunity to share my multidisciplinary background—the perfect combination for my field of study.

Another reason I moved to Japan was its super-ageing society. Since Japan also continues to be a world-recognized leader in technology, it made sense to come here. At the same time, Japan has little diversity and representation, which is a massive problem socially, as well as for research and design. Yet Tokyo Tech wanted to hire me, an English-speaking Western woman and visible foreigner of a certain age, as a research leader and educator in its engineering school. At Tokyo Tech, I see a place where new forms of leadership and innovation can emerge. I hope that my lab will contribute to this vision.

People need to evaluate how they embed their beliefs into AI

Speaking of the "human factor," how do you predict our interactions with machines will change in the future?

Right now, there is a shift from the traditional idea of "interaction" as merely input/output facilitated by computers towards a more comprehensive and critical perspective that recognizes people embed their own values, beliefs, and socially constructed understandings of the world into machines. We may be able to understand ourselves and our limitations better if we critically reflect on the technologies we create and how we interact with them.

A personal hope I have for the future is for non-sentient AI to become smart enough to take over the psychologically difficult and harmful tasks that humans must currently do. For instance, people have to manually curate social media content to find and remove hate speech, violent content, and illicit materials. At the same time, we must be careful to be consciously and conscientiously "in the loop" when it comes to the progression of these AI. We're shaping these AI and deciding what counts as illicit and what doesn't. If we don't recognize this, we are ultimately doing more harm than good. Already we have social media algorithms hiding content with "ugly" people, breast-feeding mothers, Indigenous peoples and people of color, and queer people, for no clear or consistent reason. I can only hope that I can help shape a society that we can all be happy living in, by pursuing lifelong learning and striving for integrity in the work that I do.

Research is my ikigai

Finally, what advice do you have for students aspiring to work with you or in your field?

Research sometimes proceeds slowly, bit by bit, with unpredictable setbacks. I'm someone who can't stop thinking about research and coming up with new ideas to explore regardless of any setbacks or a slow pace. It's my ikigai: a Japanese concept that refers to the things that give your life meaning and purpose (although this might just be my severely limited Western interpretation of the term). But I believe in seeking out that meaning and purpose. I want to contribute to knowledge. I'm not satisfied with superficial conclusions or the absence of an answer. I want to know and know deeply. If you feel this way, then the research life may be for you.

I love to work with people who think differently and are open to change, including changing their own minds. People who are not content with the status quo and have a vision of how to change it. People who have a range of skills and perspectives and are willing to develop technical abilities as well. Ultimately you need to have vision, drive, technical skill, and a desire to make waves.

At Tokyo Tech, if you are in the fourth year of your undergraduate degree, you can join a lab to experience research in your department, which will give you the opportunity to understand what it means to be in a lab and do research.

Making it as a researcher requires bravery, determination, creativity, and skill—as well as a good dose of luck and favorable circumstances. But, as my friend and mentor Peter Pennefather (Professor Emeritus of the University of Toronto) says, if you're someone who "just can't help themselves" when it comes to research, you'll succeed.

Katie Seaborn

Associate Professor, Department of Industrial Engineering and Economics, School of Engineering

- April 2020–present: Associate Professor, Department of Industrial Engineering and Economics, School of Engineering

- April 2020–present: Visiting Researcher, RIKEN Center for Advanced Intelligence Project (AIP)

- 2019–2020: Postdoctoral Researcher, RIKEN Center for Advanced Intelligence Project (AIP)

- 2018–2019: JSPS Postdoctoral Researcher at the University of Tokyo

- 2018–present: Honorary Fellow at the University College London Interaction Centre (UCLIC)

- 2017–2018: Postdoctoral Research Associate, UCLIC

- 2017: Teaching Assistant at University College London

- 2016: Completed Ph.D. in Mechanical and Industrial Engineering with a specialization in Human Factors and Knowledge Media Design at the University of Toronto

- 2015: Recipient of the Summer Program Fellowship for Foreign Researchers, JSPS

- 2011: Completed M.Sc. in Interactive Arts & Technology at Simon Fraser University

- 2009: Completed B.A. in Interactive Arts & Technology at Simon Fraser University

- 2007–2008: Web Programmer at Public Works & Government Services Canada (PWGSC)

- 2001–present: Freelance web development and design

NEXT generation is a new series about emerging researchers, working at the forefront of science and technology as they envision the impact their discoveries will have on society's future.

The Special Topics component of the Tokyo Tech Website shines a spotlight on recent developments in research and education, achievements of its community members, and special events and news from the Institute.

Past features can be viewed in the Special Topics Gallery.
