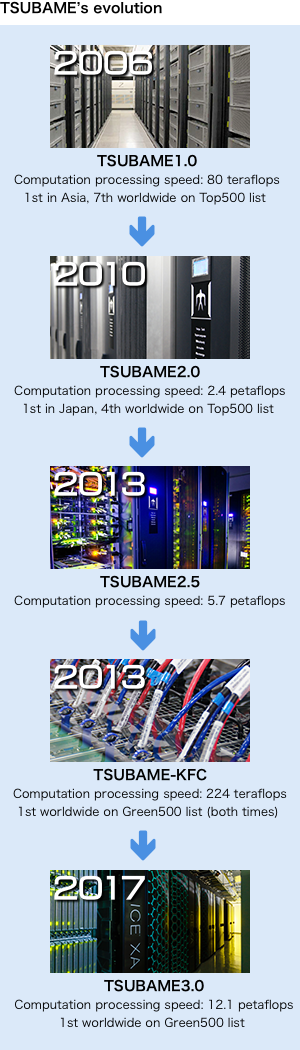

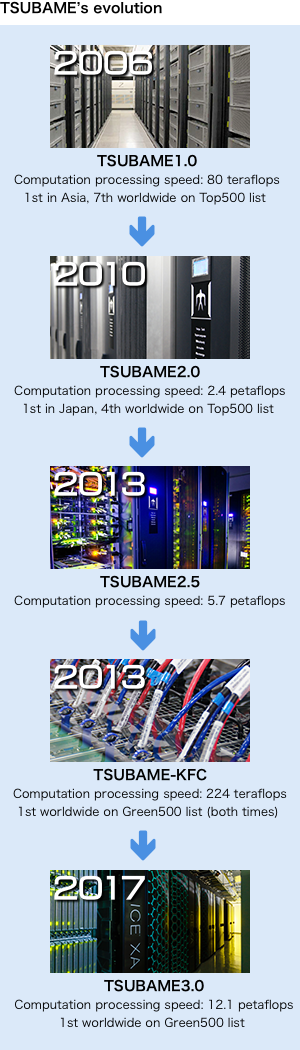

Matsuoka started research on cluster supercomputers in 1996. On the basis of his findings, he started full-scale development of TSUBAME in 2004. Since the first system entered production in March 2006, the TSUBAME series has been recognized as demonstrating the leading edge of supercomputing technology. The second incarnation of the machine, TSUBAME2.0, went into production in November 2010, and was followed by the TSUBAME2.5 upgrade in September 2013. The brand-new TSUBAME3.0 was installed and began production in August 2017.

"This was the third time that TSUBAME ranked No.1 on the Green500 list. TSUBAME-KFC, which was developed as an experimental prototype for TSUBAME3.0, was ranked No.1 in November 2013 and June 2014. This, however, is the first time it was rated No.1 in the world as a petaflops-scale production supercomputer, which is very meaningful for us," says Matsuoka.

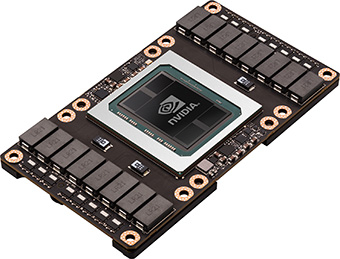

The Green500 list assesses power efficiency as processing speed per watt of power consumption. This figure does not include the power required to cool the supercomputer, which in actual use adds significantly to the base machine power and sometimes almost equals it. Cooling TSUBAME3.0, however, requires only 3 percent of total power consumption. This is roughly one tenth of that required by other supercomputers, showing that Matsuoka's creation is a practical supercomputer offering extremely high power efficiency.
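To make the arithmetic concrete, here is a minimal Python sketch of how cooling overhead changes the efficiency a site actually sees. The function names and the 10,000 GFLOPS / 1,000 W inputs are hypothetical, chosen only for illustration; they are not TSUBAME measurements. The sketch assumes the cooling share is expressed as a fraction of total (machine plus cooling) power, as in the 3 percent figure above.

```python
def gflops_per_watt(gflops: float, machine_watts: float) -> float:
    """Green500-style efficiency: processing speed per watt of machine power."""
    if machine_watts <= 0:
        raise ValueError("machine_watts must be positive")
    return gflops / machine_watts


def efficiency_with_cooling(listed_gflops_per_watt: float,
                            cooling_share_of_total: float) -> float:
    """Scale a listed efficiency so that cooling power is counted as well.

    cooling_share_of_total is the fraction of total (machine + cooling)
    power spent on cooling, so machine power is (1 - share) of the total.
    """
    if not 0.0 <= cooling_share_of_total < 1.0:
        raise ValueError("cooling_share_of_total must be in [0, 1)")
    return listed_gflops_per_watt * (1.0 - cooling_share_of_total)


# Hypothetical machine: 10,000 GFLOPS at 1,000 W of machine power.
listed = gflops_per_watt(gflops=10_000.0, machine_watts=1_000.0)

# Cooling at 3 percent of total power (the share quoted in the text).
low_overhead = efficiency_with_cooling(listed, 0.03)

# Cooling roughly equal to machine power, i.e. half of total power.
high_overhead = efficiency_with_cooling(listed, 0.50)

print(f"listed:          {listed:.2f} GFLOPS/W")
print(f"3% cooling:      {low_overhead:.2f} GFLOPS/W")
print(f"50% cooling:     {high_overhead:.2f} GFLOPS/W")
```

Under these assumptions, a 3 percent cooling share leaves about 97 percent of the listed efficiency, while cooling that matches the machine power cuts it in half.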

TSUBAME also boasts world-class processing speed. When TSUBAME2.0 began service in November 2010, it was ranked No.4 in the world and No.1 in Japan on the Top500 list. In addition, Assistant Professor Takashi Shimokawabe and Associate Professor Akira Nukada at GSIC created the world's first detailed simulation of alloy crystallization using TSUBAME2.0. Professor Matsuoka's achievements were recognized with an ACM Gordon Bell Prize, the most prestigious award in the field of supercomputing, awarded jointly with Shimokawabe, Shimokawabe's supervisor and joint researcher Professor Takayuki Aoki, and Nukada.

TSUBAME has contributed to high-performance computing (HPC) with remarkable processing speed, power efficiency, and low cost. The newest version, TSUBAME3.0, is also expected to achieve top performance in Japan in simulation science, as well as in new workloads such as AI and big data processing.

Matsuoka explains: "Theory and experimentation have traditionally played significant roles in scientific development. Recently, however, computational science represented by computer simulation has come to play a significant role as the third wave of science, and moreover, data science has rapidly attracted attention as the fourth wave of science. TSUBAME3.0 realizes the high-performance application of data science ahead of other supercomputers, and this has attracted attention around the globe."
